Section: New Results

Vocabularies, Semantic Web and Linked Data based Knowledge Representation

Semantic Web Technologies and Natural Language

Participants : Serena Villata, Elena Cabrio.

Together with Sara Tonelli (FBK, Italy) and Mauro Dragoni (FBK, Italy), we have presented the integration, enrichment and interlinking activities of metadata from a small collection of verbo-visual artworks in the context of the Verbo-Visual-Virtual project. We investigate how to exploit Semantic Web technologies and languages combined with natural language processing methods to transform and boost the access to documents providing cultural information, i.e., artist descriptions, collection notices, information about technique. We also discuss the open challenges raised by working with a small collection including little-known artists and information gaps, for which additional data can be hardly retrieved from the Web. The results of this research have been published at the ESWC conference [37].

Together with Vijay Ingalalli (LIRMM), Dino Ienco (IRSTEA), Pascal Poncelet (LIRMM), we have introduced AMbER (Attributed Multigraph Based Engine for RDF querying), a novel RDF query engine specifically designed to optimize the computation of complex queries. AMbER leverages subgraph matching techniques and extends them to tackle the SPARQL query problem. AMbER exploits structural properties of the query multigraph as well as the proposed indexes, in order to tackle the problem of subgraph homomorphism. The performance of AMbER, in comparison with state-of-the-art systems, has been extensively evaluated over several RDF benchmarks. The results of this research have been published at the EDBT conference [39].

Semantic Web Languages and Techniques for Digital Humanities

Participants : Catherine Faron-Zucker, Franck Michel, Konstantina Poulida, Safaa Rziou, Andrea Tettamanzi.

In the framework of the Zoomathia project, we conducted three complementary works, with the ultimate goal of exploiting semantic metadata to help historians in their studies of knowledge transmission through texts. First, together with Olivier Gargominy and other MNHN researchers, and Johan Montagnat (I3S, UNS), we continued a work initiated last year on the construction of a SKOS (Simple Knowledge Organization System) thesaurus based on the TAXREF taxonomical reference, designed to support studies in Conservation Biology [73]. We deployed the Corese Semantic Web factory as a backend to publish this SKOS thesaurus on the Web of Linked Open Data. This work was presented at the SemWeb.Pro 2016 conference.

Second, together with Irene Pajon (UNS) and Arnaud Zucker (UNS), we continued a work initiated last year on the construction of a SKOS thesaurus capturing zoological specialities (ethology, anatomy, physiology, psychology, zootechnique, etc.). This thesaurus was constructed while manually annotating books VIII-XI of Pliny the Elder’s Natural History, chosen as a reference dataset to elicit the concepts to be integrated in the Zoomathia thesaurus. This work has been published in the ALMA journal [79] (Archivum Latinitatis Medii Aevi).

Third, together with Arnaud Zucker (UNS), we developed an approach of knowledge extraction from ancient texts consisting in semantically categorizating text segments based on machine learning methods applied to a representation of segments built by processing their translations in modern languages with Natural Language Processing (NLP) methods and by exploiting the above described thesaurus of zoology-related concepts. We applied it to categorize Pliny the Elder’s Natural History segments. The above describe manually annotated dataset served us as goldstandard evaluate our approach. This work has been presented at the ESWC 2016 workshop on Semantic Web for Scientific Heritage [38].

Relatedly, together with Emmanuelle Kuhry (UNS) and Arnaud Zucker (UNS), we developed an approach which originates in seeing copying as a special kind of ”virtuous” plagiarism and consists in paradoxically using plagiarism detection tools in order to measure distances between texts, rather than similarities. We first applied it to the Compendium Philosophie's tradition, whose manuscript tradition is well studied and mostly understood and can therefore be considered as a gold standard. Then we applied the validated and calibrated method to investigate the Physiologus latinus's tradition, which is a complex manuscript tradition for which our knowledge is much less sure, with the aim of supporting the elaboration of stemmatological hypotheses.

Argumentation Theory and Multiagent Systems

Participants : Andrea Tettamanzi, Serena Villata.

Together with Célia da Costa Pereira (I3S, UNS) we have proposed a formal framework to support belief revision based on a cognitive model of credibility and trust. In this framework, the acceptance of information coming from a source depends on (i) the agent's goals and beliefs about the source's goals, (ii) the credibility, for the agent, of incoming information, and (iii) the agent's beliefs about the context in which it operates. This makes it possible to approach belief revision in a setting where new incoming information is associated with an acceptance degree. In particular, such degree may be used as input weight for any possibilistic conditioning operator with uncertain input (i.e., weighted belief revision operator). The results of this research have been published at the SUM conference [56].

Moreover, together with Célia da Costa Pereira (UNS) and Mauro Dragoni (FBK, Italy), we have provided an experimental validation of the fuzzy labeling algorithm proposed by da Costa Pereira et al. at IJCAI-2011 with the aim of carrying out an empirical evaluation of its performance on a benchmark of argumentation graphs. Results show the satisfactory performance of our algorithm, even on complex graph structures as those present in our benchmark. The results of this research have been published at the SUM conference [55].

Serena Villata, together with the other organizers, has also reported about the results of the first Computational Argumentation Challenge (ICCMA) in a AI Magazine paper [17].

RDF Mining

Participants : Amel Ben Othmane, Tran Duc Minh.

In collaboration with Claudia d'Amato of the University of Bari, Italy, we have carried on our investigation about extracting knowledge from RDF data, by proposing a level-wise generate-and-test [53] and an evolutionary [54] approach to discovering multi-relational rules from ontological knowledge bases which exploits the services of an OWL reasoner.

LDScript Linked Data Script Language

Participants : Olivier Corby, Catherine Faron-Zucker, Fabien Gandon.

We design and develop LDScript, a Linked Data Script Language [68]. It is a DSL (domain-specific programming language) the objects of which are RDF terms, triples and graphs as well as SPARQL query results. Its main characteristic is to be designed on top of SPARQL filter language in such a way that SPARQL filter expressions are LDScript expressions. Mainly speaking, it introduces a function definition statement into SPARQL filter language. The main use case of LDScript is the definition of SPARQL extension functions and custom aggregates. With LDScript, we were able to develop a W3C DataShape SHACL (https://www.w3.org/TR/shacl/) validator using STTL and we provide a Web service (http://corese.inria.fr).

Ontology-based Workflow Management Systems

Participants : Tuan-Anh Pham, Nhan Le Thanh.

The main objective of the PhD work is to improve Coloured Petri Nets (CPNs) and Ontology engineering to support the development of business process and business workflow definitions of the various fields and to develop a Shared Workflow Management System (SWMS) using the ontology engineering. Everybody can share a semi-complete workflow which is called ”Workflow template”, and other people can modify and complete it to use in their system. This customized workflow is called ”Personalized workflow”. The challenges of a SWMS is to be simple, easy to use, friendly with the user and not too heavy. But it must have all functions of a WMS. There are three major challenges in this work: How to allow the users to customize the workflow template to correspond to their requirements, but with their changes compliant with the predefined rules in the workflow template? How to build an execution model to evaluate step by step a personalized workflow?

A Service Infrastructure Providing Access to Variables and Heterogeneous Resources

Participants : The-Cân Do, Nhan Le Thanh.

This work is done together with Gaëtan Rey (I3S, PhD co-director). The aim of this PhD work is to develop an adaptation of applications to their context. However, in view of the difficulties of context management in its entirety, we choose to approach the problem by decomposing context management from different points of views (or contextual concerns). A concern (or point of view) may be the business process of the application, security, etc. or any other cross-functionality. In addition to simplifying the context management, sharing between different experts the analysis to be performed, this approach aims to allow the reuse of specifications of each point of view between different applications. Finally, because of the independence of points of view (from their specification to implementation), it is easily conceivable to add and/or delete dynamically points of view during the execution of the application we want to adapt. The scientific challenge of this thesis is based on the automatic resolution of conflicts between the points of view made to the adaptation of the target application. Of course, this must be done at runtime.

DBpdia.fr & DBpedia Historic

Participants : Raphaël Boyer, Fabien Gandon, Olivier Corby, Alexandre Monnin.

A new version of the DBpedia historic extractor has been developed and the database is publicly accessible on a dedicated Web server footnote http://dbpedia-historique.inria.fr/sparql. We redesigned the DBpedia Live mechanism from the international DBpedia community to deploy a DBpedia live instance that is able to update itself in near real time by following the edition notification feed from Wikipedia; it is available on our server (http://dbpedia-live.inria.fr/sparql).

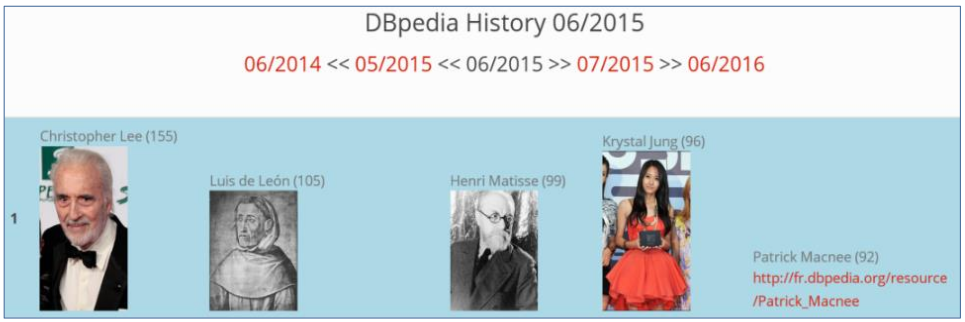

We also designed a new DBpedia extractor materializing the editing history of Wikipedia pages as linked data to support queries and indicators on the history [61], [60]. An example of application supported by this service is showed in figure 1 where we provide a Web portal based on STTL [18] crossing linked data from DBpedia.fr and DBpedia Historic to detect events concerning artists.

Finally, we redesigned the DBpedia.fr Web site with a responsive interface, a modern design and a technical documentation. The Web site is also available in English because internationalizing the document allows a wider audience8 to use the data extracted.

Provoc Ontology from SMILK

Participants : Fabien Gandon, Elena Cabrio.

ProVoc ( http://ns.inria.fr/provoc ) (Product Vocabulary) is a vocabulary that can be used to represent information about Products and manipulate them through the Web. This ontology reflects: the basic hierarchy of a company (Group/Company, Divisions of a Group, Brand names attached to a Division or a Group) and the production of a company (products, ranges of products, attached to a Brand, the composition of a product, packages of products, etc.).